As widely expected, the 2013 Nobel Prize in Physics was awarded for the Higgs boson. The committee chose to award only the theoretical prediction, omitting the experimental teams at CERN, to the annoyance of some. Nobel tradition notwithstanding, there were apparently no strict rules preventing the inclusion of CERN as an organization in the physics prize, which would indeed better reflect how fundamental science is done these days. Admittedly, the Nobel committee gave a very visible nod to the experimentalists in awarding the prize:

for the theoretical discovery of a mechanism that contributes to our understanding of the origin of mass of subatomic particles, and which recently was confirmed through the discovery of the predicted fundamental particle, by the ATLAS and CMS experiments at CERN’s Large Hadron Collider.

As always, Nature News and Ars Technica have good stories covering the prize, and there's also extensive reporting by the BBC. However, even rewarding theoreticians for this discovery was tricky; as the Nobel committee puts it, the award was for the "Brout-Englert-Higgs (BEH)-mechanism", with only François Englert and Peter Higgs (both in their 80s) sharing the prize, since Robert Brout died in 2011. Some have argued that very important earlier contributions from Anderson should have been recognized, as well as the independent but slightly later work by Kibble, Guralnik and Hagen. As Nature News puts it:

In 1964, six physicists independently worked out how a field would resolve the problem. Robert Brout (who died in 2011) and Englert were the first to publish, in August 1964, followed three weeks later by Higgs — the only author, at the time, to allude to the heavy boson that the theory implied. Tom Kibble, Gerald Guralnik and Carl Hagen followed. “Almost nobody paid any attention,” says Ellis — mostly because physicists were unsure how to make calculations using such theories. It was only after 1971, when Gerard ’t Hooft sorted out the mathematics, that citations started shooting up and the quest for the Higgs began in earnest.

So numerous were the theorists involved, that Higgs reputedly referred to the ABEGHHK’tH (Anderson–Brout–Englert–Guralnik–Hagen–Higgs–Kibble–’t Hooft) mechanism.

...but I guess the ABEGHHK'tH-mechanism just doesn't roll off the tongue as easily as "Higgs" or "BEH" :)

For additional context on the theoretical developments, see this post on the LHC's Quantum Diaries blog. However, if you have any knowledge of quantum theory, I would most warmly recommend putting in the effort to read the Scientific Background from the Nobel committee. It describes the preceding and parallel developments, and the later significance and discovery, in great detail and as clearly as can reasonably be expected, giving full credit where it is due. The committee even visibly acknowledges the role of the US in the discovery, which some felt needed to be explicitly defended. Of course, the Popular information document is more accessible, and very well written.

In the end, despite the potential for controversy, the decision seems to be reasonably well received, with Hagen showing the most emotion:

"Regarding the committee’s choice, “I think in all honesty, this is what I would have done,” says John Ellis, a theoretical physicist at CERN, Europe’s particle-physics lab near Geneva, Switzerland." (Nature News)

"The whole of CERN was elated today to learn that the Nobel Prize for Physics had been awarded this year to Professors François Englert and Peter Higgs for their theoretical work on what is now known as the Brout-Englert-Higgs mechanism." (Pauline Gagnon via Quantum Diaries)

"The discovery of the Higgs boson at Cern... marks the culmination of decades of intellectual effort by many people around the world." (Rolf Heuer via the BBC)

"My two collaborators, Gerald Guralnik and Carl Richard Hagen, and I contributed to that discovery, but our paper was unquestionably the last of the three to be published in Physical Review Letters in 1964 (though we naturally regard our treatment as the most thorough and complete) and it is therefore no surprise that the Swedish Academy felt unable to include us, constrained as they are by a self-imposed rule that the prize cannot be shared by more than three people. My sincere congratulations go to the two prize winners, Francois Englert and Peter Higgs." (Tom Kibble via BBC News)

“Faced with a choice between their rulebook and an evenhanded judgment, the Swedes chose the rulebook,” Hagen said in a blunt e-mail shortly thereafter. “Not a graceful concession by any means, but that department has never been my strong suit.” (Carl Hagen via the Washington Post)

“It stings a little,” Guralnik said. But he added: “All in all, it’s a great day for science. I’m really proud to have been associated with this work that has turned out to be so important.” (Gerald Guralnik via the Washington Post)

To wrap up, Ken Bloom, via the LHC's Quantum Diaries blog, offers a very practical perspective on science in the trenches:

I suppose that my grandchildren might ask me, “Where were you when the Nobel Prize for the Higgs boson was announced?” I was at CERN, where the boson was discovered, thus giving the observational support required for the prize. And was I in the atrium of Building 40, where CERN Director General Rolf Heuer and hundreds of physicists had gathered to watch the broadcast of the announcement? Well no; I was in a small, stuffy conference room with about twenty other people.

[...]

So in the end, today was just another day at the office — where we did the same things we’ve been doing for years to make this Nobel Prize possible, and are laying the groundwork for the next one.

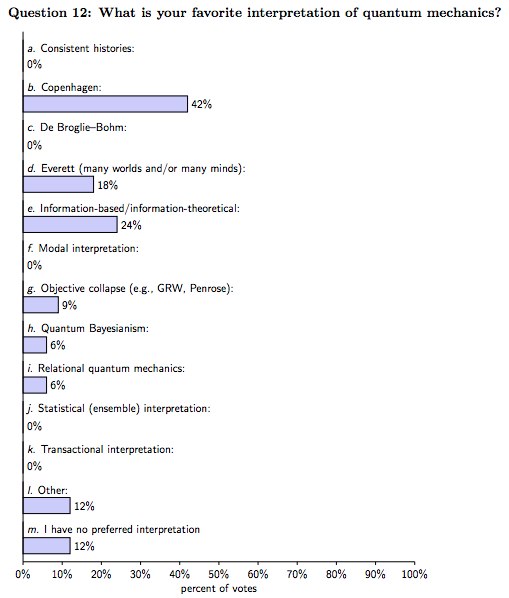

The answer seems to be: NO.
